Using Robotic Cameras and CG Animation to Take to the Skies (and Space)

befores & afters goes behind the scenes of two UTS Animal Logic Academy shorts exploring the intersection between analogue and digital animation.

Originally posted by befores & afters

Combining real miniature sets, robotic motion control cameras and CG animation is a somewhat uncommon approach to producing an animated short. But that’s exactly what director Simon Rippingale did recently in a collaboration between the UTS Animal Logic Academy (ALA) and the Royal Australian Air Force (RAAF) on two projects–Jasper and Jarli. These engaging short films are aimed at encouraging female and indigenous participation and interest in the air force and space industries.

Rippingale started studying the Master of Animation and Visualisation and has since graduated with a PhD from the Academy. He pitched the story ideas to the RAAF to promote the air force and space programmes to women and to indigenous people in Australia. The director brought together a team that would combine traditional animation design with elaborate miniature sets shot with cameras positioned on robotic arms (sometimes more than one), and then add in CG animated characters.

Both Jasper and Jarli represent examples of Animal Logic and UTS’ commitment to pursuing innovation in the animation and VFX sectors through the Academy. Jarli, too, is an extension of UTS’ commitment to excellence in indigenous higher education and research. Here, Rippingale and art director Louis Pratt break down the process the team followed for befores & afters.

b&a: How did Jarli come about as a project?

Simon Rippingale: It started off with the Jasper project. I wanted to explore, for my PhD project, the idea of taking miniature sets with 3D characters further than we had in a previous project, A Cautionary Tale. It was all about: ‘How can we look at new technologies?’ The obvious place with that was robotics.

Then, at the same time as I was thinking about that, this guy whose name is Wing Commander Jerome from the Royal Australian Air Force (RAAF) came into UTS, and he said, ‘We want to convince women to fly aeroplanes, what do we do? You guys are creatives…’. I got up and pitched an idea. I said, ‘You want to get kids flying planes? You need a story, don’t preach to them, don’t do an ad. Kids love story.’

I pitched a story about a little girl who wanted to fly a plane, and there was no dialogue. I pitched it in a way that we could look at every shot as a moving camera, and it was very simple. It was just two minutes and it was under 20 shots. I wanted to put all the love into those 20 shots and see if we could get a nice look that way.

So that’s how Jasper got made. Then, the RAAF said, ‘Let’s do it again, but now we also want to reach out to the indigenous community. We want to get more indigenous people involved in the aerospace industry.’ I pitched another story and I got Chantelle Murray, who’s an indigenous director, involved and a couple of writers as well. We put this creative team together, and that’s how Jarli came about.

b&a: Louis, how did you come on board?

Louis Pratt: We were both completing our PhDs at the Academy, and as friends we’ve worked on a lot of film projects together. I was involved in set designs, and in helping to figure out a pipeline for the robotic camera moves. I got in touch with Evan Atherton, who was at the Autodesk Robotics Lab and made this tool called Mimic (it’s a plugin to Maya) to help control industrial robots. We were using these big KUKA robot arms to hold the cameras.

The interesting thing about all that was, we could adjust the set design based on the abilities of these robot arms, because we knew they could do these huge, sweeping camera moves, and so the scale of the sets got bigger. In fact, the whole thing transformed at that point.

b&a: Just on the robot arms, how did they work?

Simon Rippingale: The idea stemmed from motion control. It’s obviously not a new thing, but motion control technology has been relatively exclusive to studio productions. The KUKA robotics mean that you can take a relatively common piece of equipment that’s used in manufacturing and in various industries, and apply it to filmmaking. Mimic enabled us to hook that up with common, industry-standard 3D animation software. It took away some of that exclusivity.

b&a: How did you tackle concepts and storyboarding for Jarli?

Simon Rippingale: I did a fair amount of 3D mock-up using really primitive shapes. Mimic enabled us to download the 3D model of the KUKA we were going to use, and throw it into those layout scenes to give me some sense of the limitations. Then you could simulate a shot and say, ‘Well, the camera is not going to let us do that’, and change the set-up if needed.

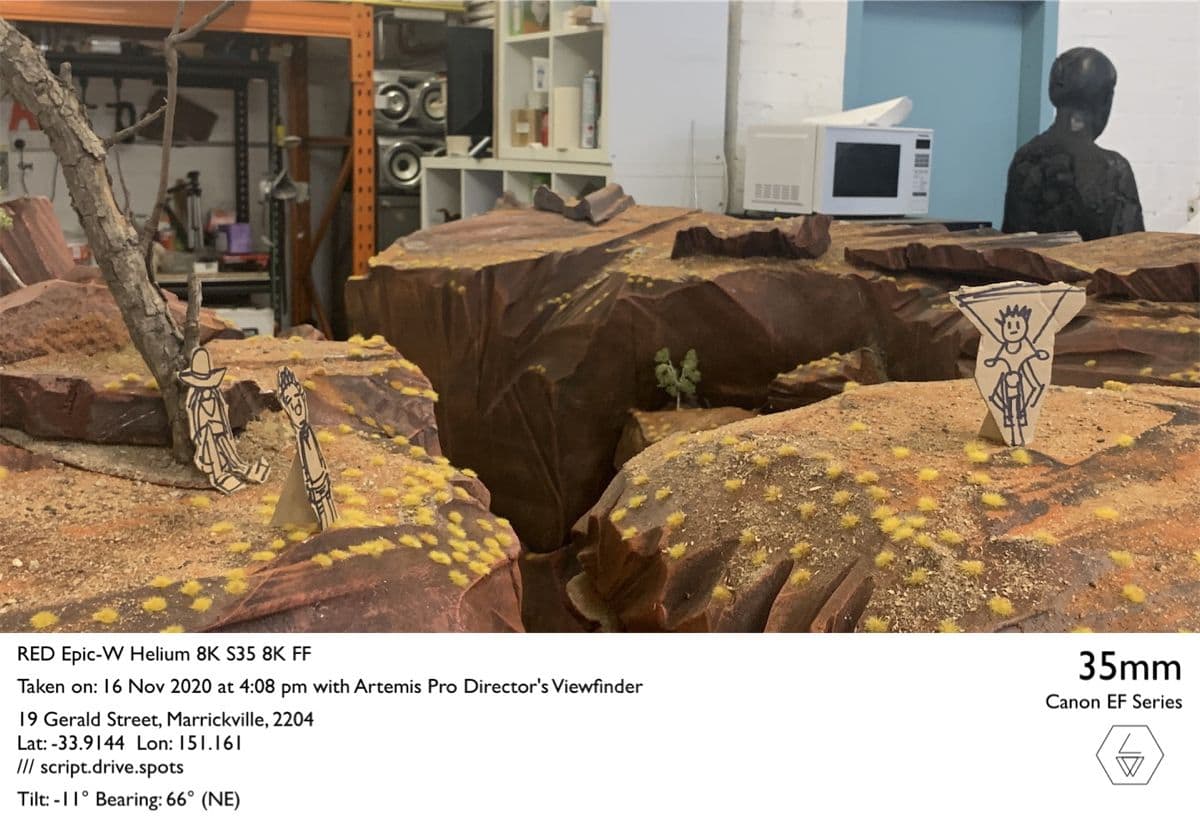

Louis Pratt: So Simon sketched out the basic shapes and then we got huge blocks of poly to build a whole canyon. We knew the reach of the robot arm, particularly once we got one robot arm on top of the other, so we could just hold out a tape measure and we’d know we could get a lot of angles in a shot.

Simon Rippingale: Then I was doing walkthroughs with my phone and iPad using an app called Artemis, which lets you dial in the lenses. I can choose, say, a 50mm lens and walk through the shot and develop it that way.

b&a: It actually sounds like a pretty interesting approach to shot design.

Louis Pratt: Yes, I think one of the things that was particularly unique about the process that Simon did was that during shooting, he actually pulled apart the set and made a couple of different shots, and just added them in on the spot. In an animation pipeline, I’ve never even heard of that before.

Simon Rippingale: Honestly, one of the most interesting things about this project for me as a director was using Mimic and a KUKA for motion control, and being able to be creative on the day. In the live-action world, often you’ll get to set and the director and filmmakers decide to just shoot from a different place or a different way. In the animation world, that degree of flexibility doesn’t always happen because you are sticking to boards and animatics, because animators are already animating to that camera, or for a multitude of reasons.

Louis Pratt: We also had a great pipeline going from Maya and Mimic to the KUKA enabling us to update the robot in seconds. When we had previously done it without that approach, it could sometimes take hours.

b&a: Can you tell me more about the approach to the miniature set construction?

Louis Pratt: A lot of the materials were real materials. One of the things that worked really well on a lot of the sets was just getting some red desert snake sand in there, and mixing it with glue. We talked about using sand much like a painter uses paint.

Simon Rippingale: I’d never really worked with sand before, it’s a really nice material. It gives you this great weathered feeling to shapes. It was a fun material to play with, and you just wouldn’t get that building a world in a computer like that.

Louis Pratt: It’s actually probably why we were able to produce all the sets so quickly. You could mix different sand colours, spray it down and, bang, ‘Oh wow, it looks good, it looks so real.’

b&a: This is obviously a hybrid production, meaning you were shooting backgrounds, and then animating CG characters. What did the visual effects side of the shoot involve, in terms of anything standing in for the characters during filming?

Simon Rippingale: The simple answer is, we just figured out where the focal point was, and we’d shoot every path with something to focus on like a tennis ball or grey balls. With more time and money, I would invest more time on rough grayscale 3D prints of each character for each shot, that you could put in front of the camera for lighting reference. A tennis ball was almost enough, but we could take it further.

We also scanned our sets with an Artec Leo. The 3D scans were used to give us a very accurate layout. The animators could be animating a character on the edge of a cliff, for example, and with the scan they had a pretty accurate to-scale digital version of the cliff that they could be working to without having to guess the camera movement.

b&a: There’s something about the ‘look’ of Jarli which is very interesting, which comes from combining real sets with CG animation. What’s your take on that?

Simon Rippingale: That’s my whole bag, really, to be honest with you. That’s the whole reason I do these crazy projects. I don’t think there’s many people doing this. I’m not the only one there, The Gruffalo does it, for example, and there’s been a few productions that have gone down this road and attempted this hybrid style, but most people shy away from it. It’s a niche approach.

I like the look. I don’t want to be a stop-motion animator because it’s just too hard. You’ve got to do all that wire rig removal at the end and you’ve got to do all that face replacement, and you’ve got to get rid of all the edges. It’s a huge undertaking.

And then I love computer generated animation, but I also love coming into the studio with Louis and other people and getting away from the computer. Walking around and getting dirty and making things and talking about them.

It’s just my happy place. I love miniatures and I find them so fun to work on. You turn the music up, it’s so creative. I think people are really drawn to miniatures, there’s something enigmatic about a miniature set. If you have a miniature set and you get 20 people in the room with the set, they are really drawn to it and they lean over and they look in, and their imagination is triggered. They say, ‘What is the story here?’ They even start making their own little stories in their head. I think there’s something really enigmatic about them just as objects, they’re beautiful things.

The UTS Animal Logic Academy is a collaboration between the University of Technology Sydney and one of Australia’s leading animation studios, Animal Logic. The Academy offers an accelerated 1-year Master of Animation and Visualisation, a 14-week Graduate Certificate as well as Masters by Research and PhD opportunities. Enrolments for 2023 are now open.

The UTS Animal Logic Academy is also proud to announce that in collaboration with their industry partner Animal Logic, they will be offering up to 2 scholarships for domestic students completing the Master of Animation and Visualisation in 2023.

The Animal Logic Scholarship provides financial support to talented individuals from under-represented groups or those experiencing financial hardship in order to enable them to participate in postgraduate study in the animation, visual effects and visualisation industries, by offering $18,000 to assist with living costs and/or tuition fees.

To find out more about The Animal Logic Scholarship, please join Ian Thomson (Head of Animal Logic Academy), Alex Weight (Creative Lead) and Karen Bennett (Global Head of HR, Animal Logic), as they discuss the background behind the scholarship, as well as eligibility criteria and how to apply.

And for full scholarship details, please visit the Animal Logic Scholarship page here.