Ryan Benno, a Senior Environment Artist at Insomniac Games, posted a Twitter thread on the history of texturing in 3D video games. In it, he shares interesting facts from the history of the medium.

We have come a long way since the early days of real-time 3D on home consoles, but some of the practices used to create game textures still date back to those times.

Let's start with the basics: real-time rendering versus pre-rendering. Most 3D games use the former, where your machine draws each image in real time.

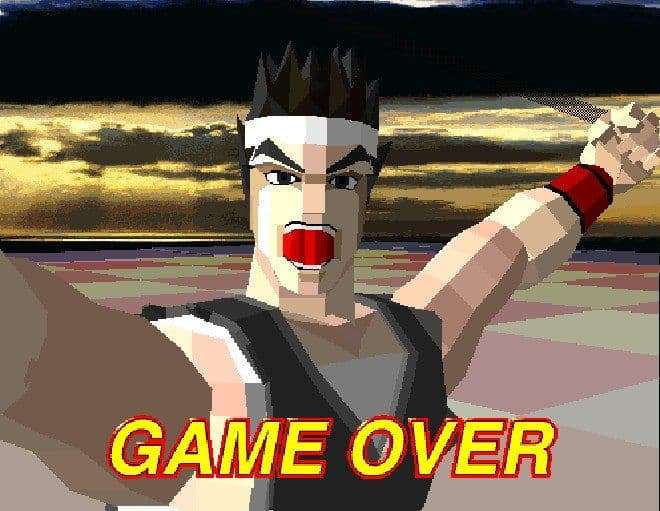

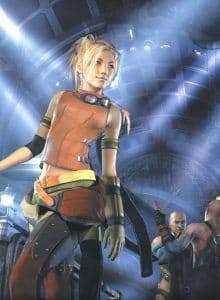

The two approaches produce very different levels of quality. Interactivity requires real-time processing, but static content (cinematics, static backgrounds) can be rendered in advance. The differences were overwhelming: compare the pre-rendered background with the real-time character in this 1999 example.

Pre-rendering lets you use lots of "expensive" rendering techniques, where a single frame can take hours or even days to render. That is fine for still images or films, but games have to run at 30 to 60 frames per second all the time, which leaves only about 33 ms (at 30 fps) or 16.7 ms (at 60 fps) to draw each frame.

On one hand, you had Star Fox as an early example of real-time 3D on 16-bit consoles; on the other, Donkey Kong Country used pre-rendered CG converted into sprites (with a heavily reduced color palette). For a long time, real-time rendering could not achieve comparable results.

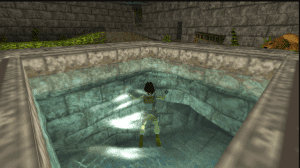

With the arrival of capable 3D consoles such as the N64 and PS1, the limits of real-time rendering became clear. There were no real-time lights that could cast shadows or properly light the scene, no material response, no bump mapping, and only low-resolution geometry and textures. How did artists get around this?

Artists painted lighting information (shadows, highlights, depth) directly into the textures. A player's shadow was usually just a simple texture that followed the character; casting an accurate shadow was not possible.

You might get basic shading on models, but it usually lacked correct lighting information. Games like Ocarina of Time (OoT) and Crash Bandicoot relied heavily on lighting information painted into their textures, along with vertex colors painted onto the geometry, to make areas look lighter, darker, or tinted a particular color.
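To make the vertex-color idea concrete, here is a minimal sketch, assuming colors are RGB tuples in the 0.0 to 1.0 range: a color painted on each vertex is blended across the triangle with barycentric weights and multiplied with the texture sample, darkening or tinting it. The function names are hypothetical, and this is not how any particular console's hardware was actually programmed.

```python
# Minimal sketch: vertex-color "baked lighting" modulating a texture sample.
# Colors are (r, g, b) tuples in 0.0-1.0; all names here are hypothetical.

Color = tuple[float, float, float]

def interpolate_vertex_color(c0: Color, c1: Color, c2: Color,
                             w0: float, w1: float, w2: float) -> Color:
    """Blend three vertex colors with barycentric weights (w0 + w1 + w2 == 1)."""
    return tuple(w0 * a + w1 * b + w2 * c for a, b, c in zip(c0, c1, c2))

def shade_texel(texel: Color, vertex_color: Color) -> Color:
    """Multiply the texture color by the painted vertex color (darken or tint)."""
    return tuple(t * v for t, v in zip(texel, vertex_color))

# A grey wall texel near a corner painted dark blue; the other corners are white.
tint = interpolate_vertex_color((0.2, 0.2, 0.3), (1.0, 1.0, 1.0), (1.0, 1.0, 1.0),
                                0.5, 0.25, 0.25)
print(shade_texel((0.8, 0.8, 0.8), tint))
```

Because the tint is just a per-vertex multiply, it costs almost nothing at runtime, which is why it suited hardware without real lighting.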

Tremendous creative work went into overcoming these limitations. Painting or baking lighting information into textures is still done to varying degrees, but as real-time rendering has grown more capable, these old techniques are used less and less.

The next generation of consoles, the PS2, Xbox, and GameCube, would help solve some of these problems.

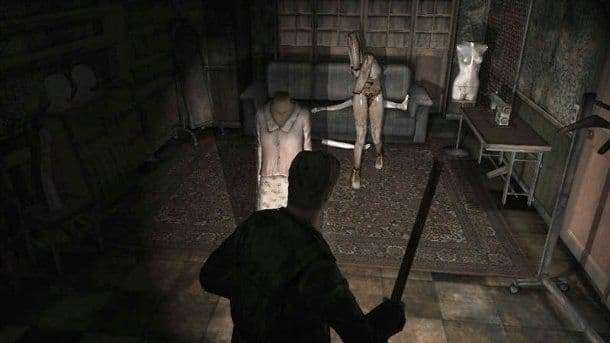

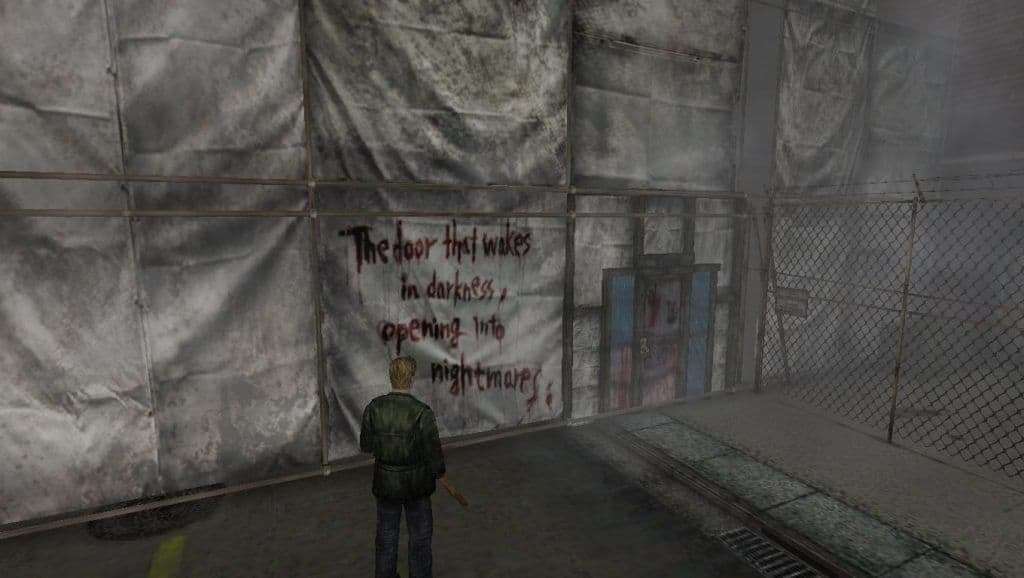

Silent Hill 2 was one of the defining games of that generation, and its biggest breakthrough in 2001 was real-time shadows. Some of the lighting information previously baked into textures could now be removed, but most games of this generation still relied on it heavily.

Higher resolutions improved the picture: with more pixels, textures could store much more detail. Specular highlights were rare, and there was still no proper material response.

This is another reason baking information into textures remained common. None of this was a problem for pre-rendering, where fabric looked more realistic and glass, hair, and skin could look believable. Real-time rendering needed a push, and it got one at the end of the generation (on the Xbox only).

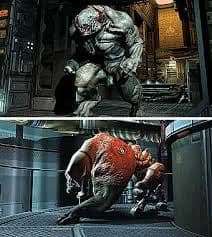

Specular and normal maps arrived in games such as Halo 2 and Doom 3. Specular maps let surfaces react to light more realistically, so metal finally looked shiny. Normal maps let you add much more detail to models with very low polygon counts.

If you work in 3D, you know what a normal map is. For everyone else: it is a type of bump map that stores a surface direction for each texel, letting surfaces react to light with far more detail than the geometry alone provides.
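A minimal sketch of how a normal map changes lighting, assuming the common 8-bit encoding where each channel maps 0..255 to -1..1 and a light direction already expressed in the same tangent space. Real engines differ in details (for example, some flip the green channel), so treat the helper names and encoding as assumptions. Two texels on the same flat polygon receive different amounts of light because their stored normals differ.

```python
# Minimal sketch: decoding a tangent-space normal-map texel and using it for
# Lambertian (diffuse) lighting. Assumes 8-bit channels mapped 0..255 -> -1..1;
# conventions vary between engines (e.g. a flipped green channel).
import math

def decode_normal(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Map 8-bit RGB to a unit-length normal vector."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in (r, g, b))
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)

def lambert(normal, light_dir) -> float:
    """Diffuse term: cosine between normal and light direction, clamped at 0."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))

flat = decode_normal(128, 128, 255)    # texel pointing straight out of the surface
tilted = decode_normal(200, 128, 220)  # texel whose baked normal leans toward +X
light = (0.0, 0.0, 1.0)                # unit light direction, straight on
print(lambert(flat, light), lambert(tilted, light))
```

The polygon itself is flat; all of the apparent relief comes from the per-texel normals, which is exactly what makes the technique cheap on low-poly models.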

Many game artists changed the way they created textures. With normal maps, assets took significantly more time to create. Sculpting tools such as ZBrush became the norm for building high-poly models, whose detail is baked into textures for use on low-poly models.

Before this, many textures were either hand-painted or stitched together in Photoshop. In the Xbox 360 and PS3 era, as resolutions kept increasing, this way of creating textures became a thing of the past in many games.

Real-time materials also began to borrow from how pre-rendered shading works, which changed the rules of the game for many artists. Materials became much more complex than before. This 2005 demo was unlike anything seen at the time, and the 360 had not even come out yet.

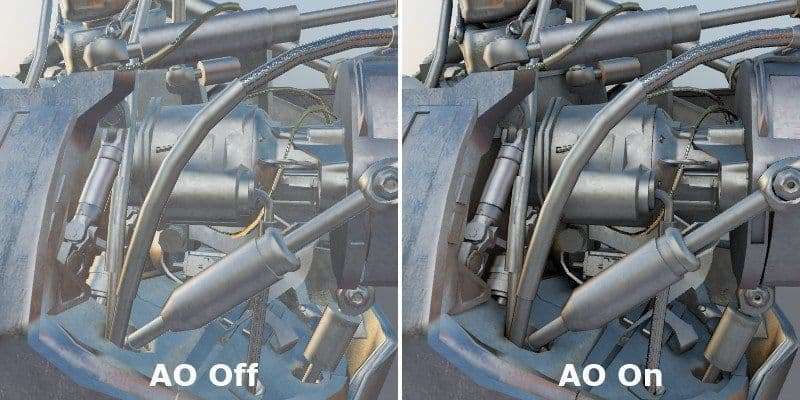

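We also met a new way of lighting scenes: ambient occlusion (AO). It was too expensive for real-time rendering, so artists simply baked it into the textures! AO approximates the soft shadowing that indirect light produces in creases, corners, and other areas that are partly blocked from their surroundings.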

Even today, real-time AO is not 100% accurate, but it is close, thanks to techniques such as SSAO (screen-space ambient occlusion) and DFAO (distance field ambient occlusion). Baked AO maps are still in use, but they will likely disappear someday as renderers get even better.
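Baking AO offline is commonly done by casting rays over the hemisphere above each surface point and recording the fraction that escape without hitting anything nearby. The sketch below assumes a deliberately tiny scene (a floor at y = 0 meeting an infinite wall at x = 0), cosine-weighted sampling, and a hypothetical `max_dist` cutoff; a production baker would trace against the real high-poly mesh instead.

```python
# Minimal sketch of baking ambient occlusion by ray casting. The "scene" is a
# hypothetical floor (y = 0) meeting an infinite wall (x = 0); a point on the
# floor at distance d from the wall has the surface normal (0, 1, 0).
import math
import random

def sample_cosine_hemisphere(rng: random.Random) -> tuple[float, float, float]:
    """Cosine-weighted direction around the +Y normal (uniform disk, projected up)."""
    u1, u2 = rng.random(), rng.random()
    r, phi = math.sqrt(u1), 2.0 * math.pi * u2
    return (r * math.cos(phi), math.sqrt(1.0 - u1), r * math.sin(phi))

def bake_ao(distance_to_wall: float, samples: int = 512,
            max_dist: float = 1.0, seed: int = 1) -> float:
    """Fraction of rays that escape: 1.0 = fully open, lower = darker crease."""
    rng = random.Random(seed)
    unoccluded = 0
    for _ in range(samples):
        dx, _dy, _dz = sample_cosine_hemisphere(rng)
        # A ray can only hit the wall plane x = 0 if it heads toward it (dx < 0);
        # the hit distance along the ray is distance_to_wall / -dx.
        hits_wall = dx < 0.0 and distance_to_wall / -dx <= max_dist
        if not hits_wall:
            unoccluded += 1
    return unoccluded / samples

# Points close to the wall come out darker than points out in the open.
print(bake_ao(0.05), bake_ao(0.5), bake_ao(2.0))
```

The resulting values would be written into a texture and multiplied into the ambient lighting, which is what artists did when real-time AO was too expensive.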

In summary, the PS3 and X360 era brought much higher resolutions than the previous generation, along with new texture maps for shading. And, of course, improved lighting: you could get real-time shadows for the whole scene, or bake the lighting for finer detail.

Pretty cool, right? Well, there were still some flaws: low-resolution models and textures, plus a lot of overhead from the new shaders.

Another problem was the specular map. A single map described how shiny the object was, so materials did not feel real. Some developers, including the team behind BioShock Infinite, began splitting their specular maps into separate layers.

Specular information could now be split between the type of material (wood, gold, concrete, etc.) and the material's "age" (scratches, wear, etc.). This coincided with the arrival of a new shading model, physically based rendering (PBR).
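One way to picture this split is a base value per material type, blended toward a "worn" value wherever a wear mask is painted. The gloss numbers, the material table, and the linear blend below are illustrative assumptions, not the actual BioShock Infinite shader.

```python
# Minimal sketch: combining a per-material gloss value with a "wear" layer.
# All material numbers are hypothetical; gloss runs from 0.0 (matte) to 1.0.

BASE_GLOSS = {"wood": 0.35, "gold": 0.9, "concrete": 0.15}

def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b by t in [0, 1]."""
    return a + (b - a) * t

def layered_gloss(material: str, wear_mask: float, worn_gloss: float = 0.2) -> float:
    """Start from the material's base gloss, then blend toward a duller
    'worn' gloss wherever the wear mask (scratches, grime) is painted."""
    return lerp(BASE_GLOSS[material], worn_gloss, wear_mask)

# The same gold material on a pristine texel and a heavily scratched one.
print(layered_gloss("gold", 0.0), layered_gloss("gold", 0.8))
```

The appeal of the split is reuse: one wear mask can age any material, and one material definition can appear pristine or battered without repainting a whole specular map.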

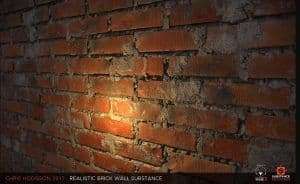

This brings us to the present day and the current generation. PBR has become the standard for many games. The approach, popularized by Pixar, standardized how plausible materials are created in computer graphics, and it can be applied in real time!
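PBR describes materials through physically meaningful parameters instead of hand-tuned shininess. One small ingredient found in many real-time PBR shading models is Schlick's approximation of the Fresnel effect: surfaces reflect more light at grazing angles. The sketch assumes F0 (reflectance at normal incidence) of 0.04, a widely used default for non-metals. It illustrates the idea and is not any specific engine's shader.

```python
# Minimal sketch of Schlick's Fresnel approximation, used by many real-time
# PBR shading models. F0 is reflectance at normal incidence; 0.04 is a common
# default for non-metals, while metals use much higher values.

def fresnel_schlick(f0: float, cos_theta: float) -> float:
    """F(theta) = F0 + (1 - F0) * (1 - cos(theta))^5, with cos_theta clamped to [0, 1]."""
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

# A non-metal seen head-on vs. at a grazing angle: reflectance rises sharply.
print(fresnel_schlick(0.04, 1.0), fresnel_schlick(0.04, 0.1))
```

Because the behavior follows from physics rather than artist tweaking, the same material reacts plausibly under any lighting, which is much of why PBR standardized material authoring.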

On top of that, the industry has built on technologies from previous generations. Tone mapping and color grading have improved in the current generation, handling adjustments that you would previously have spent forever making by tweaking textures.
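Tone mapping compresses the wide range of brightness a renderer computes into the 0 to 1 range a display can show, so artists no longer need to bake that balance into textures. Below is a minimal sketch using the classic Reinhard operator, x / (1 + x), with an assumed exposure multiplier applied per channel; shipped games typically use more elaborate filmic curves plus a separate color-grading step.

```python
# Minimal sketch: the simple Reinhard tone-mapping operator, which squeezes
# unbounded HDR intensities into the 0..1 range a display can show.
# The exposure parameter and per-channel application are simplifying assumptions.

def reinhard(value: float, exposure: float = 1.0) -> float:
    """Scale by exposure, then map x to x / (1 + x)."""
    x = value * exposure
    return x / (1.0 + x)

def tone_map_rgb(rgb, exposure: float = 1.0):
    """Apply the operator to each linear HDR channel independently."""
    return tuple(reinhard(c, exposure) for c in rgb)

# A bright sky pixel (well above 1.0) and a dim interior pixel.
print(tone_map_rgb((8.0, 6.0, 4.0)))
print(tone_map_rgb((0.05, 0.04, 0.03)))
```

Bright values approach 1.0 without clipping, while dark values pass through almost unchanged, so detail survives at both ends of the range.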

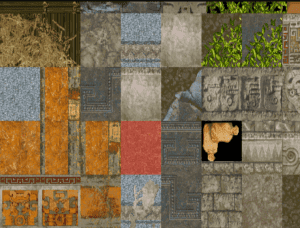

In conclusion, here are the textures required to create an early 3D game, compared with what is needed for a single texture set in a game today.

I would like to add one more thing: games are made by people! Technologies and processes are constantly changing, but we should still appreciate the masters of their era. Appreciate the history!